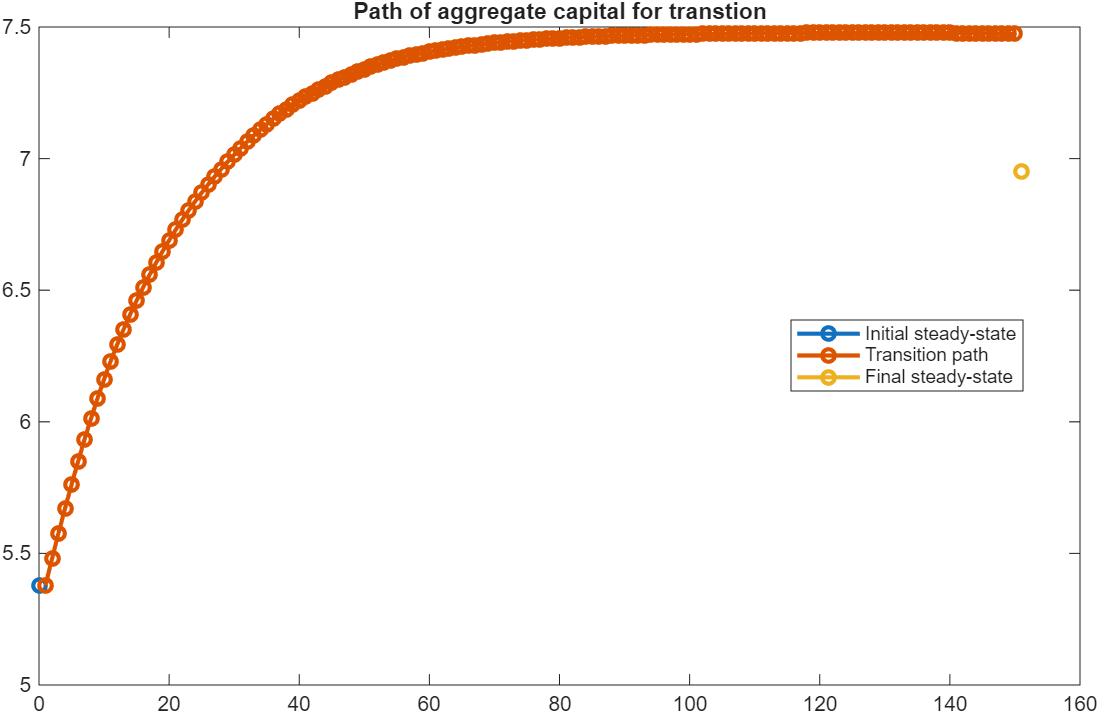

I think the comments in the Aiyagari example with Transition are not consistent with the code.

The comments say that there is a change in beta (the discount factor), while the code changes alpha (the capital share parameter in the Cobb-Douglas production function).

As a suggestion, perhaps it would be more interesting to show a change in a tax rate, since this is the typical application of transitions in Bewley models. To keep the example simple, I would assume that there is a linear tax rate on capital income which is used to finance a lump-sum transfer. In this way, you don’t need a government budget constraint in the code.

Household budget constraint:

$$c + a' = (1 + r_t(1-\tau_t))a + w_t z + T_t, \quad a' \geq 0$$

where $\tau_t$ is the tax rate on capital income and $T_t$ is the lump-sum transfer.

Implicitly, the government budget constraint is

$$T_t = \tau_t r_t K_t$$
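
To make the two equations concrete, here is a minimal Python sketch of how the transfer and consumption could be computed. The function names and the numbers are made up for illustration; this is not the toolkit's API, just the arithmetic of the budget constraint above.

```python
def lump_sum_transfer(tau, r, K):
    """Transfer implied by the balanced government budget: T = tau * r * K."""
    return tau * r * K


def consumption(a, a_next, z, r, w, tau, T):
    """Consumption implied by the household budget constraint.

    Returns None when the choice is infeasible, i.e. when the
    borrowing constraint a' >= 0 is violated or consumption
    would not be strictly positive.
    """
    if a_next < 0:
        return None
    c = (1 + r * (1 - tau)) * a + w * z + T - a_next
    return c if c > 0 else None


# Illustrative (hypothetical) values: tau = 20%, r = 4%, K = 5, w = 1
T = lump_sum_transfer(tau=0.2, r=0.04, K=5.0)
c = consumption(a=5.0, a_next=5.0, z=1.0, r=0.04, w=1.0, tau=0.2, T=T)
```

For a household holding exactly the aggregate capital stock, the tax paid and the transfer received cancel, so consumption equals r*K + w*z when assets are kept constant.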

Then the experiment would be to compute the transition after the tax rate increases from, say, 20% to 40%. The code would show the evolution over time of aggregate capital, output and some measure of inequality like the Gini of assets.
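
For the inequality measure, a Gini coefficient of assets can be computed directly from the stationary (or period-t) distribution over the asset grid. The sketch below is plain Python and assumes the distribution is given as a list of grid values and a matching list of probability masses; `gini`, `values` and `weights` are hypothetical names, and the formula is the standard trapezoid rule on the Lorenz curve. It assumes non-negative assets, which holds here because a' >= 0.

```python
def gini(values, weights):
    """Gini coefficient of a discrete distribution.

    values  : asset levels (assumed non-negative)
    weights : mass of households at each asset level
    """
    pairs = sorted(zip(values, weights))  # order households by wealth
    total_w = sum(w for _, w in pairs)
    total_v = sum(v * w for v, w in pairs)
    if total_w <= 0 or total_v <= 0:
        raise ValueError("need positive total mass and positive total assets")

    area = 0.0         # area under the Lorenz curve
    lorenz_prev = 0.0  # cumulative asset share up to the previous grid point
    cum_assets = 0.0
    for v, w in pairs:
        cum_assets += v * w
        lorenz = cum_assets / total_v
        # trapezoid between consecutive points of the Lorenz curve
        area += (w / total_w) * (lorenz_prev + lorenz) / 2
        lorenz_prev = lorenz
    return 1 - 2 * area


# Half of the households hold nothing, half hold one unit of assets
g = gini([0.0, 1.0], [0.5, 0.5])
```

Calling this once per period along the transition path would give the time series of the asset Gini.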

This is just an idea.