There is a very interesting paper by Eugene Tan (University of Toronto), “A Fast and Low Computational Memory Algorithm For Non-Stochastic Simulations in Heterogeneous Agent Models”, published in Economic Letters, link here

The paper provides a new method to compute the stationary distribution in heterog agents economies, which is faster and less memory-demanding than the textbook method (as described e.g. in Liunqvist and Sargent textbook).

In a nutshell, the standard method relies on computing a big transition matrix that gives the probability of moving from point (a,z) today to point (a’,z’) tomorrow, where a denotes the endog state and z is the exog state (I follow the standard notation of the toolkit). Unfortunately this object is often large (even if it is built as a sparse matrix) and can become not manageble if the state space is too big.

Eugene’s method instead split the problem in two parts: first, compute the transition operator from (a,z) to (a’,z), fixing z at today’s level. Then do a matrix multiplication to update z using the exogenous transition matrix pi_z. The paper provides a great explanation and offers much more detail than I can do here of course.

I have implemented Eugene’s method in Matlab within the toolkit. I modified a few lines of the toolkit function StationaryDist_Case1.m (see code below). Then I added the function StationaryDist_Case1_Iteration_raw_AD. If the user selects

simoptions.method = ‘AD’

then the toolkit will call the new function StationaryDist_Case1_Iteration_raw_AD and solve the distribution using the new method. If instead the user writes

simoptions.method = ‘RK’

the toolkit will call the function StationaryDist_Case1_Iteration_raw and use the standard method.

It is important to initialize simoptions.method to either ‘AD’ or ‘RK’, otherwise the code will throw an error.

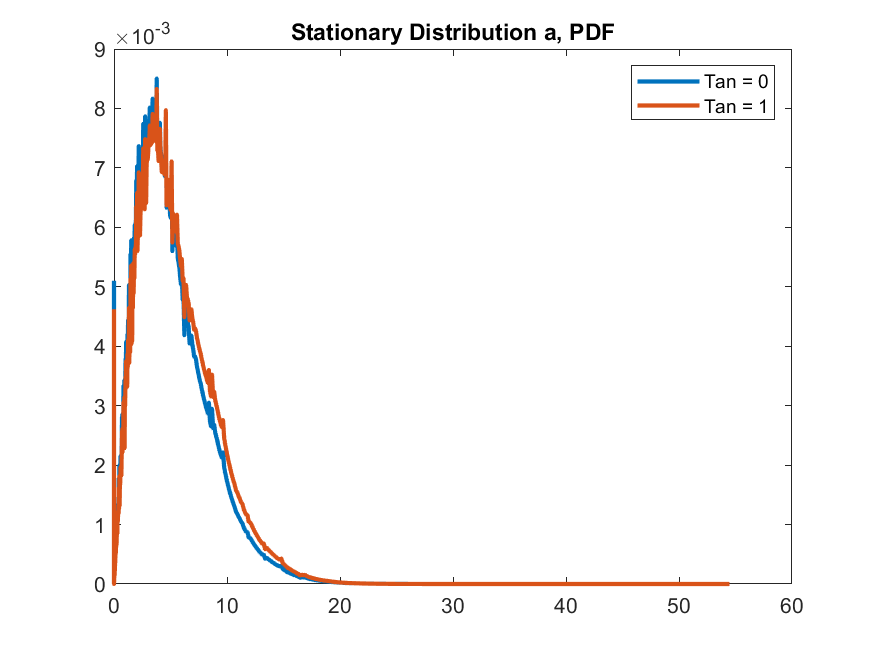

I provide an example using Aiyagari’s model on my github page

I rely on Robert’s Aiyagari example and show that the new method is about 12 times faster than the standard method, given a parametrization with n_a=512 and n_z=21, everything else as in Robert’s example.

A disclaimer: my code is tested only for the simplest case with infinite horizon, no d variable, no semi-endogenous shocks, etc. (so, basically, Aiyagari 1994).

Let me know what you think!

Here is the modification to the toolkit function StationaryDist_Case1.m

%% Iterate on the agent distribution, starts from the simulated agent distribution (or the initialdist)

if simoptions.iterate==1

if strcmp(simoptions.method,‘RK’)

StationaryDistKron=StationaryDist_Case1_Iteration_raw(StationaryDistKron,PolicyKron,N_d,N_a,N_z,pi_z,simoptions);

elseif strcmp(simoptions.method,‘AD’)

StationaryDistKron=StationaryDist_Case1_Iteration_raw_AD_vec(StationaryDistKron,PolicyKron,n_d,n_a,n_z,pi_z,simoptions);

end

end